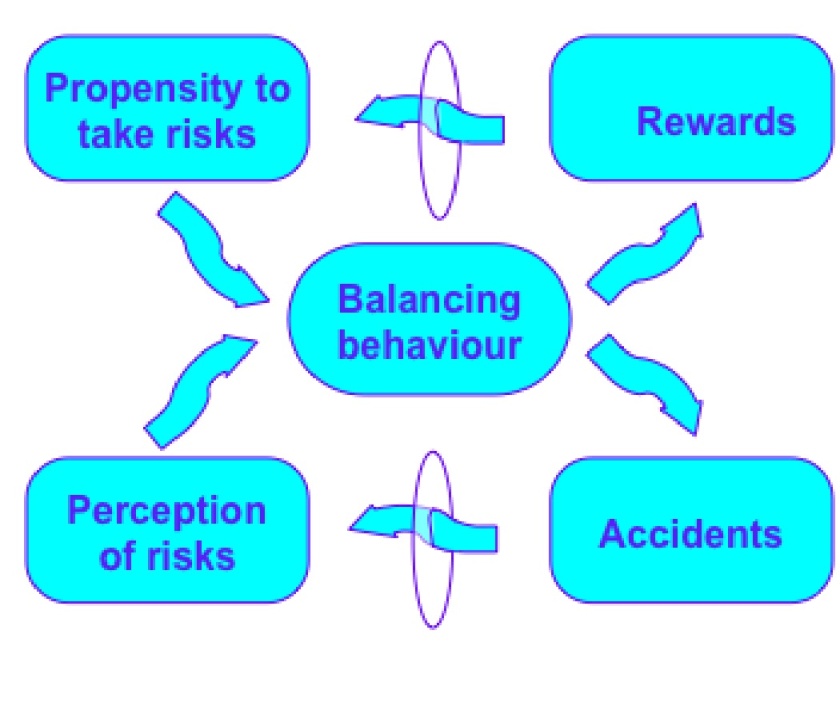

A few weeks ago Richard Gibson from Gartner spoke to members of the CCIO group. It was a fascinating, wide-ranging talk, so much so that managing the time effectively was a challenge. Dr Gibson talked about the implications of technological innovations for acute care and long-term care. As might be obvious from my previous post here, I have a concern that much of the focus on empowerment via wearables and consumer technology misses the point that the vast bulk of healthcare is acute care and long-term care. As Dr Gibson pointed out, at the rate things are going, healthcare will be the only economic, social, indeed human activity in years to come.

One long-term concern I have about connected health approaches is engaging the wider group of clinicians. Groups like the CCIO do a good job (in my experience!) of engaging the already interested, who are more than likely unabashedly enthusiastic. At the other extreme, there is always going to be some resistance to innovation almost on principle. In between, there is a larger group who are interested but perhaps sceptical.

One occasional response from peers to what I will call “informatics innovations” (to emphasise that this is not only about ICT but also about care planning and various other approaches that do not depend on “tech” for implementation) is to ask “where is the evidence?” And often this is not a call for empirical studies as such, but for an impossible standard: RCTs!

Now, I advocate for empirical studies of any innovation, and a willingness to admit when things are going wrong based on actual experience rather than theoretical evidence. In education, I strongly support the concept of Best Evidence Medical Education and indeed in following public debates and media coverage about education I personally find it frustrating that there is a sense that educational practice is purely opinion-based.

With innovation, the demand for RCT-based evidence is something of a category error. There is also a wider issue of how “evidence-based” has migrated from healthcare to politics. In Helen Pearson’s Life Project we read how birth cohorts went from ignored, chronically underfunded studies run by a few eccentrics to celebrated, slightly less underfunded, flagship projects of British epidemiology and sociology. Since the 1990s, they have enjoyed a policy vogue in tandem with a political emphasis on “evidence-based policy.” My own thought on this is that it is one thing to have an evidence base for a specific therapy in medical practice, quite another to have one for a specific intervention in society itself.

I am also reminded of a passage in the closing chapters of Donald Berwick’s Escape Fire (I don’t have a copy of the book to hand so bear with me) which essentially consists of a dialogue between a younger, reforming doctor and an older, traditionally focused doctor. Somewhat in the manner of the Socratic dialogues in which (despite the meaning ascribed now to “Socratic”) Socrates turns out to be correct and his interlocutors wrong, the younger doctor has ready counters for the grumpy arguments of the older one. That is until towards the very end, when in a heartfelt speech the older doctor reveals his concerns not only about the changes of practice but what they mean for their own patients. It is easy to get into a false dichotomy between doctors open to change and those closed to change; often what can be perceived by eager reformers as resistance to change is based on legitimate concern about patient care. There are also concerns about an impersonal approach to medicine. Perhaps ensuring that colleagues know, to as robust a level as innovation allows, that patient care will be improved, is one way through this impasse.
